High bandwidth memory is in rapidly rising demand thanks to its lightning-fast data transfers

Ah, remember the dial-up days, waiting an eternity for a grainy movie download? Those clunky days are long gone. Today's fastest memory chips can whizz through more than 160 full HD movies' worth of data in a blink: under one second, mind you!
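How fast is that, really? Here's a back-of-the-envelope sketch in Python. It rests on two assumptions of ours, not figures from any spec: a full HD movie weighs in at about 5 GB, and dial-up runs at the nominal 56 kbit/s of a 56k modem. On those numbers, 160 movies a second means roughly 800 GB/s of bandwidth, while a single movie over dial-up would take more than a week.

```python
# Back-of-the-envelope maths for the "160 full HD movies in under a second" claim.
# ASSUMPTIONS (not from any spec): a full HD movie is ~5 GB, and dial-up
# runs at the nominal 56 kbit/s of a 56k modem.
MOVIE_SIZE_GB = 5.0
MOVIES_PER_SECOND = 160
DIAL_UP_BITS_PER_S = 56_000
SECONDS_PER_DAY = 86_400


def implied_bandwidth_gb_per_s(movies: int, size_gb: float) -> float:
    """Data rate (GB/s) needed to move `movies` files of `size_gb` each in one second."""
    return movies * size_gb


def dial_up_download_days(size_gb: float, bits_per_s: float = DIAL_UP_BITS_PER_S) -> float:
    """Days needed to download `size_gb` decimal gigabytes at `bits_per_s`."""
    bits = size_gb * 1e9 * 8  # gigabytes -> bytes -> bits
    return bits / bits_per_s / SECONDS_PER_DAY


bandwidth = implied_bandwidth_gb_per_s(MOVIES_PER_SECOND, MOVIE_SIZE_GB)
print(f"Implied memory bandwidth: {bandwidth:.0f} GB/s")  # 800 GB/s
print(f"One movie over dial-up: {dial_up_download_days(MOVIE_SIZE_GB):.1f} days")  # 8.3 days
```

Change the assumed movie size and the bandwidth figure scales in step. The point is the order of magnitude, not the exact number.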

The brains behind this lightning speed? High bandwidth memory, or HBM, a technology once scoffed at as a pricey pipe dream. Back in 2013, analysts said, "Nah, mate, not gonna fly." Fast forward to 2023, and American chip giants like Nvidia and AMD are giving HBM a second life, making it the backbone of their top AI chips.

Why's the AI world drooling over HBM? It's all about data, guv'nor. Companies are cramming data centres with fancy AI systems, from chatbots to robots who write sonnets. These data-guzzlers need more processing power and bandwidth than a chip shop on a Friday night.

Traditional memory chips are starting to sweat under the pressure. Pushing data through faster means adding more of them, and more chips take up more space and guzzle more power. Imagine your flat overflowing with chips: not ideal for the wallet or the electric bill.

Here's where HBM comes in. Instead of spreading chips out, HBM stacks them up like a biscuit tin pyramid. It's clever stuff: memory dies thinner than a crisp are piled on top of one another and wired together with tiny vertical connections called through-silicon vias, packing them tighter than sardines down at the docks.

This stacking trick is a game-changer for AI chipmakers. With the chips sitting so close together, data has less distance to travel, so less energy is needed: HBM uses roughly three-quarters less than the old way. And research shows it delivers five times the bandwidth in half the space, a real boon for cramped data centres.
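Those figures compound nicely. This quick Python sketch treats the quoted numbers as rough ratios against a conventional-memory baseline. It also assumes, as our own reading, that the energy comparison is for the same amount of data moved. On that basis, five times the bandwidth in half the space works out to about ten times the bandwidth per unit of space. Three-quarters less energy leaves a quarter of the original bill.

```python
# Turning the article's relative claims into simple ratios.
# Baseline: conventional memory = 1.0 for bandwidth, board area and energy.
# ASSUMPTION: the energy saving is compared for the same amount of data moved.
BANDWIDTH_RATIO = 5.0   # "five times the bandwidth"
AREA_RATIO = 0.5        # "in half the space"
ENERGY_SAVING = 0.75    # "roughly three-quarters less" energy


def bandwidth_density_gain(bandwidth_ratio: float, area_ratio: float) -> float:
    """How much more bandwidth you get per unit of space versus the baseline."""
    return bandwidth_ratio / area_ratio


def relative_energy(saving: float) -> float:
    """Fraction of the baseline energy still used after a fractional saving."""
    return 1.0 - saving


print(bandwidth_density_gain(BANDWIDTH_RATIO, AREA_RATIO))  # 10.0
print(relative_energy(ENERGY_SAVING))  # 0.25
```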

It's not brand new tech, though. AMD and South Korean chip whiz SK Hynix were tinkering with HBM back in the day, when top-notch chips were all about gaming graphics. Back then, critics scoffed: "More speed? Not worth the extra quid!" By 2015, analysts were writing HBM off as a niche fad, too pricey for the masses.

HBM chips are still pricey, mind you, costing about five times as much as your average memory chip. But AI chips themselves are a pricey lot, and tech giants have deeper pockets than your average gamer.

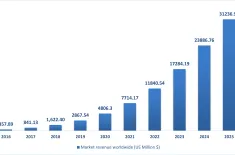

For now, SK Hynix has the upper hand, mass-producing the latest HBM3 for today's AI chips, with a hefty 50% market share. But Samsung and Micron are hot on their heels with their own upgraded versions coming soon. They all stand to make a killing off this high-margin, high-demand market. TrendForce even predicts a 60% global bump in HBM demand this year, and the AI chip war is set to send that even higher in 2024.

AMD just threw down the gauntlet with a new chip to go toe-to-toe with Nvidia. With a chip shortage and a thirst for an affordable Nvidia alternative, there's a golden opportunity for challengers to offer chips with similar specs. But toppling Nvidia takes more than just hardware. It's also about its software ecosystem, with all those fancy developer tools and programming models. That's a battle that'll take years to win.

So, for now, hardware is king for those wanting to challenge Nvidia, and so is the number of HBM stacks crammed into each chip. More memory, more power: that's how AMD's playing the game, with its latest MI300 accelerator sporting eight HBM stacks compared to Nvidia's five or six.
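How much does the stack count alone matter? Here's a rough Python sketch under a simplifying assumption of ours, not the article's: every HBM stack holds the same amount of memory. In practice, capacity per stack differs between products and generations. On that assumption, eight stacks give 1.6 times the memory of a five-stack design and about 1.33 times a six-stack one.

```python
from fractions import Fraction

AMD_MI300_STACKS = 8
NVIDIA_STACK_OPTIONS = (5, 6)  # "five or six", per the text


def memory_ratio(stacks_a: int, stacks_b: int) -> Fraction:
    """Ratio of total HBM capacity, ASSUMING every stack holds the same amount."""
    return Fraction(stacks_a, stacks_b)


for n in NVIDIA_STACK_OPTIONS:
    ratio = memory_ratio(AMD_MI300_STACKS, n)
    print(f"{AMD_MI300_STACKS} vs {n} stacks: {float(ratio):.2f}x")
```

Using `Fraction` keeps the ratios exact (8/5 and 4/3) until they're rounded for display.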

The chip industry has always been a rollercoaster, boom and bust, like a Brighton Pier ride. But with this sustained demand for new, powerful AI chips, future downturns might be less of a white-knuckle affair. So, buckle up, tech fans, the HBM-powered AI revolution is just getting started!